In what sense do "feature detectors" detect features?

Our perceptual system provides us with an internal representation of the world. It is constructed after the sense data are received through our sense accessories, where they are transduced by receptors into electrical impulses in a data-reducing, neural coding process culminating in the firing of action potentials. Debate about the development of our perceptual mechanisms can be split into nativist and empiricist camps, although most psychologists would now acknowledge at least some interaction between biological and learnt processes. Alternatively, it can be split into top-down and bottom-up views, i.e. knowledge-driven versus data-driven accounts of how we perceive objects, although again some interplay between the two seems necessary and can be demonstrated by "priming subjects".

That visual perception is not an easy task did not become apparent until similar feats of pattern recognition were attempted with computers on images. Our visual system starts to shape the raw data even as an image is formed on our retinas. Marr outlined four major stages in vision processing, the first being a "grey-level description", representing the intensity of light at each point in the retinal image in order to discover regions in the image and their boundaries.

A simpler example of delineating an object's boundaries might be the recognition of a square. The theoretical explanation behind this involves a hierarchical feature net, where detectors for the simpler elements trigger detectors for the more complex elements. In this case, our feature detectors first recognise four straight lines of perpendicular orientations, then four right-angles, all of which together finally trigger the square detector which recognises the composite figure.
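The hierarchy just described can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: segments are represented as pairs of `(x, y)` endpoints, only horizontal and vertical lines are handled, and the function names are hypothetical. It is not a model of real neurons, only of the idea that simpler detectors feed more complex ones.

```python
from itertools import combinations

# Toy assumption: a line segment is a pair of (x, y) endpoints, given as tuples.

def orientation(seg):
    """Return 'h' for a horizontal segment, 'v' for a vertical one, else None."""
    (x1, y1), (x2, y2) = seg
    if y1 == y2 and x1 != x2:
        return "h"
    if x1 == x2 and y1 != y2:
        return "v"
    return None

def line_detectors(segments):
    """Level 1: keep the segments that a horizontal or vertical detector fires for."""
    return [s for s in segments if orientation(s) is not None]

def right_angle_detectors(lines):
    """Level 2: fire once per pair of perpendicular lines sharing one endpoint."""
    corners = []
    for a, b in combinations(lines, 2):
        shared = set(a) & set(b)
        if orientation(a) != orientation(b) and len(shared) == 1:
            corners.append(next(iter(shared)))
    return corners

def length(seg):
    (x1, y1), (x2, y2) = seg
    return abs(x2 - x1) + abs(y2 - y1)  # valid because segments are axis-aligned

def square_detector(segments):
    """Level 3: fire only if the lower levels report four equal-length lines
    and four distinct right-angled corners."""
    lines = line_detectors(segments)
    corners = right_angle_detectors(lines)
    return (len(lines) == 4
            and len(set(corners)) == 4
            and len({length(s) for s in lines}) == 1)

square = [((0, 0), (2, 0)), ((2, 0), (2, 2)), ((2, 2), (0, 2)), ((0, 2), (0, 0))]
open_shape = square[:3]  # one side missing

print(square_detector(square))      # True
print(square_detector(open_shape))  # False
```

The square detector never looks at the raw segments itself; it responds only to the outputs of the line and right-angle levels, which is the point of a feature net.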

Before discussing what detection mechanisms exist, I want first to consider the basis for the neural coding of the photoreceptors' input. The evolution of the eye can be seen as the culmination so far of a string of tiny steps all the way from complete insensitivity to light intensity. As in a camera, light streams in towards the retina, focused by the lens, with its level controlled by a diaphragm (the iris), and into a black-lined area (the lining is the choroid coat, which continues forward into the iris around the pupil) where it stimulates photoreceptive cells: about 120 million achromatic, higher-sensitivity rods and 6 million trichromatic cones, the latter mainly found in the fovea.

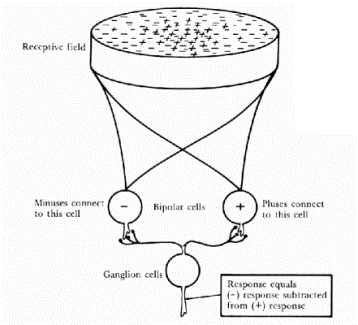

These receptors are linked to the ganglion cells by bipolar cells (which, by an anatomical curiosity, lie between the photoreceptors and the lens), with between 10 and 1000 receptors per ganglion cell, depending on how central the receptor is. Each of these groupings forms a "receptive field", defined by Kuffler & Barlow (1953) as "that area of the retina such that light (or a pattern of light) falling within that area affects the pattern of firing", with excitatory and inhibitory inputs being spatially summed.
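Spatial summation over a receptive field can be sketched as a weighted sum. The 3x3 grid, the weights and the light values below are hypothetical, chosen only so that the excitatory centre and the inhibitory surround balance exactly; the result is best read as a change in firing relative to a baseline rate, not as a firing rate itself.

```python
# Hypothetical weights for a 3x3 on-centre/off-surround receptive field:
# the central receptor excites the ganglion cell, the eight surrounding
# receptors inhibit it, and the weights sum to zero.
WEIGHTS = [
    [-1, -1, -1],
    [-1,  8, -1],
    [-1, -1, -1],
]

def ganglion_response(patch, weights=WEIGHTS):
    """Spatially sum excitatory and inhibitory inputs over one receptive field."""
    return sum(w * light
               for w_row, p_row in zip(weights, patch)
               for w, light in zip(w_row, p_row))

uniform = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]  # diffuse light over the whole field
spot    = [[0, 0, 0], [0, 5, 0], [0, 0, 0]]  # light on the centre only
annulus = [[5, 5, 5], [5, 0, 5], [5, 5, 5]]  # light on the surround only

print(ganglion_response(uniform))  # 0
print(ganglion_response(spot))     # 40
print(ganglion_response(annulus))  # -40
```

Under these assumptions, diffuse illumination produces no net response, while a spot confined to the centre excites the cell and light confined to the surround inhibits it.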

Ernst Mach and Ewald Hering provided evidence in the 19th century of lateral inhibition with their Mach bands:

Even though the brightness intensity of the middle section varies uniformly, our eyes perceive a particularly dark stripe and a particularly bright stripe on either side of it. This is due to on-centre/off-surround receptive fields, whose inhibitory rings overlap, so that each cell is laterally inhibited by its neighbours, causing the spike rate to be particularly low on the dark edge and particularly high on the bright edge. By coding for change (spatial change in the case of lateral inhibition, but temporal change too), the amount of data required to describe a scene is drastically reduced. Selective coding of information about pattern or the changes of light and dark at the retina (Ratliff & Hartline, Barlow) is an example of redundancy reduction.
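The Mach band effect can be reproduced with a very small one-dimensional sketch of lateral inhibition. The luminance values, the inhibition strength `k` and the treatment of the two end units are all assumptions made for illustration, not measured quantities.

```python
def lateral_inhibition(luminance, k=0.25):
    """Each unit's output is its own input minus a fraction k of the input to
    each immediate neighbour. End units reuse their own value in place of the
    missing neighbour (an arbitrary choice for this sketch)."""
    n = len(luminance)
    out = []
    for i, centre in enumerate(luminance):
        left = luminance[max(i - 1, 0)]
        right = luminance[min(i + 1, n - 1)]
        out.append(centre - k * (left + right))
    return out

# A dark plateau, a uniform ramp, then a bright plateau.
luminance = [10] * 5 + [20, 30, 40, 50] + [60] * 5
response = lateral_inhibition(luminance)

print(response[3], response[4])    # 5.0 2.5   (dip at the foot of the ramp)
print(response[9], response[10])   # 32.5 30.0 (peak at the top of the ramp)
```

Within each plateau the output is constant, but the unit at the foot of the ramp responds less than the rest of the dark plateau and the unit at the top responds more than the rest of the bright plateau, which corresponds to the dark and bright bands we perceive.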

Hubel & Wiesel (1959) identified three types of cells, which respond to only very low-level, basic features, such as lines of differing orientations, lengths and directions:

| Cell type | Response properties |
| --- | --- |
| 1. Simple cells | Orientation-tuned; separate excitatory and inhibitory regions |
| 2. Complex cells | Orientation-tuned; often direction-selective; no separate excitatory or inhibitory regions |
| 3. Hyper-complex cells | Orientation-tuned; often direction-selective; no separate excitatory or inhibitory regions; selective for length (either one or both ends) |

However, these on their own are not feature detectors. Although they are selective to certain lines or bars, the response of a single cell is necessarily ambiguous, being modulated by a variety of different stimulus dimensions (e.g. length, position, luminance, contrast, wavelength, motion). Moreover, information about orientation is coded in a distributed fashion, i.e. preserved in the pattern of firing across many cortical cells, rather than in any single one.
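A small sketch may make the ambiguity and the distributed code concrete. The cosine tuning curve, the six preferred orientations and the population-vector read-out are assumptions chosen for simplicity; they are one common way of illustrating the idea, not the mechanism the cortex is known to use.

```python
import math

PREFERRED = [0, 30, 60, 90, 120, 150]  # hypothetical preferred orientations (degrees)

def cell_response(preferred, orientation, contrast):
    """Hypothetical tuning curve: the response scales with contrast and falls
    off as the bar rotates away from the preferred orientation (period 180)."""
    delta = math.radians(orientation - preferred)
    return contrast * (1 + math.cos(2 * delta)) / 2

def population_response(orientation, contrast):
    """Responses of the whole population to one oriented bar."""
    return [cell_response(p, orientation, contrast) for p in PREFERRED]

def decode_orientation(responses):
    """Population-vector estimate, computed on doubled angles because
    orientation repeats every 180 degrees."""
    x = sum(r * math.cos(math.radians(2 * p)) for r, p in zip(responses, PREFERRED))
    y = sum(r * math.sin(math.radians(2 * p)) for r, p in zip(responses, PREFERRED))
    return (math.degrees(math.atan2(y, x)) / 2) % 180

# One cell alone cannot tell these two stimuli apart (both give about 0.25):
print(cell_response(0, 60, 1.0), cell_response(0, 45, 0.5))

# The pattern across the population can (about 60 and 45 degrees):
print(decode_orientation(population_response(60, 1.0)))
print(decode_orientation(population_response(45, 0.5)))
```

The cell preferring 0 degrees gives essentially the same response to a high-contrast bar at 60 degrees as to a low-contrast bar at 45 degrees, yet the two orientations are recovered once the responses of all six cells are considered together.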

Lettvin et al. (1959) first provided evidence of a frog's "bug detector": certain ganglion cells in a frog's optic nerve which respond intensely to a small, dark object moving around within a certain retinal region. The obvious evolutionary explanation is the frog's need to rapidly identify insects flying about within range. However, the level at which visual analysis takes place varies between species, with higher animals such as cats and primates having relatively simple retinal detectors and far more work being carried out in the visual cortex.

Perception is not simply a matter of reproducing a 2-dimensional image like a photograph, but rather of extracting information from it to form an internal representation of its 3-dimensional content. In humans, this appears to come about through a multi-layered system: redundancy reduction at the retinal level, then simple and complex cells in the visual cortex, whose rates of firing in conjunction with each other build up an increasingly sophisticated coding of the input's contours, shapes and motions. This information is then processed with reference to memory to develop recognised patterns into objects and then scenes.